Careers

We create games that are played for years and remembered forever.

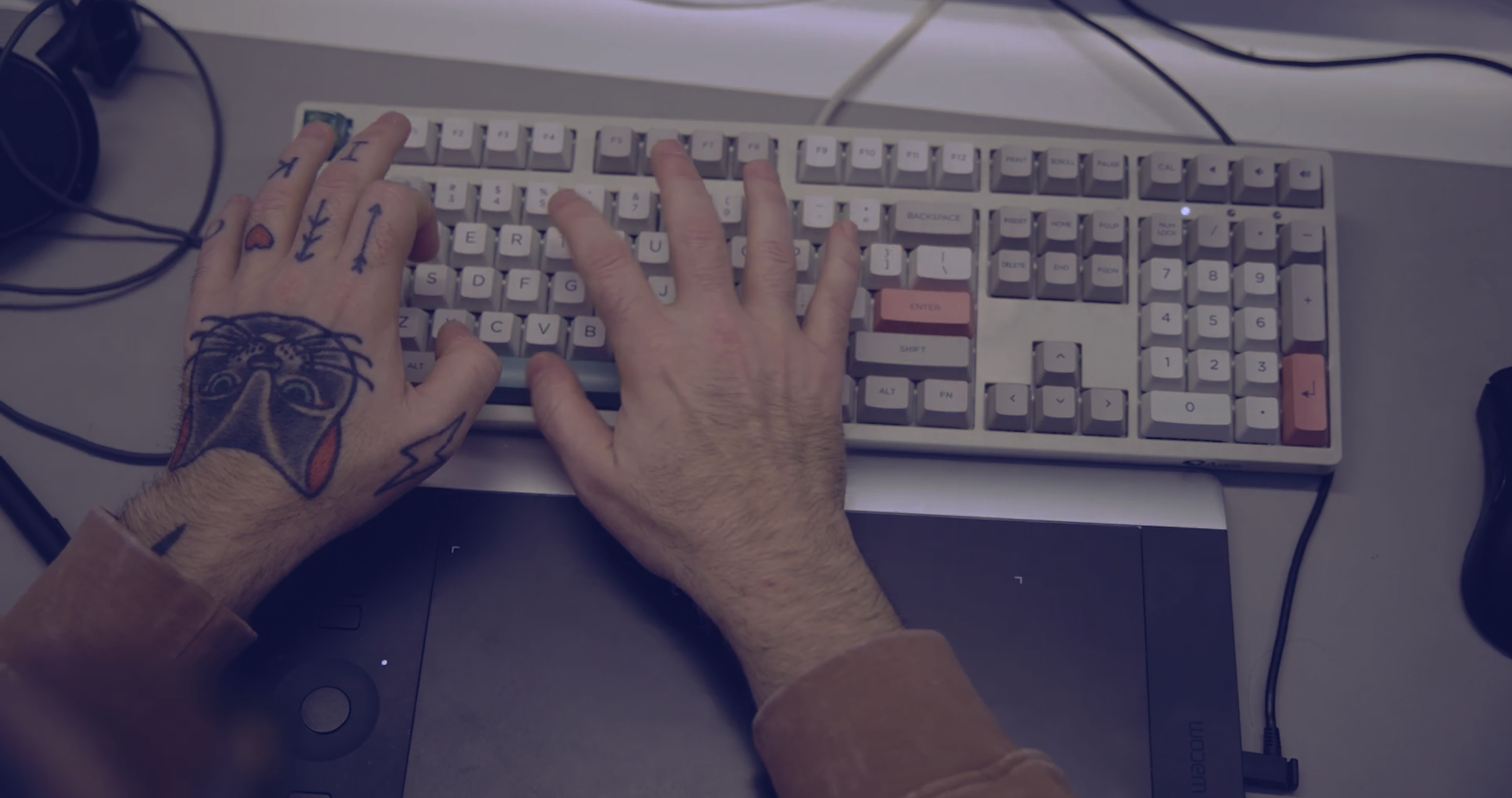

Why you might love it here

We believe games have the power to bring people around the world together and closer to each other. We work to create new, innovative, memorable experiences no one has played before. This is why we try to design games that excite wide and diverse player communities and that expand the audience for otherwise smaller “niche” game concepts.

If you love to think, talk, play and make games, Supercell is the place for you. We’ve built a company of proactive and independent teams with the freedom to do what they think is best for their players, our games and the company at large.

Featured Positions

Senior Game Designer, New Project

Senior Game Programmer, Brawl Stars

VFX Artist, Clash of Clans

Senior Data Scientist, Clash of Clans

Our offices

Our global teams are home to individuals from over 40 nationalities. We believe in transparency, open communication and spending time with one another. While all teams have their own unique traits, it’s the underlying culture, shared values and love for games that keep us together.

Joining Supercell

Our hiring process is designed to let you showcase your skills and get an understanding of what working with us would be like.

Moving Abroad?

We'll make your relocation as smooth as possible! Start by picturing yourself in Helsinki or Shanghai.

Open Positions

- Analytics Lead, Clash Royale | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Senior Data Engineer, Platform | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Senior Animator, Brawl Stars | Helsinki | Game Development | onsite | full-time, permanent

- Senior Concept Artist, Brawl Stars | Helsinki | Game Development | onsite | full-time, permanent

- Senior Game Researcher | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Technical Artist, Clash of Clans | Helsinki | Game Development | onsite | full-time, permanent

- Platform Partnerships Manager | Helsinki | Entertainment & Partnerships | onsite | full-time, permanent

- Head of Data, Analytics, and Insights | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Project Manager, Live Operations and Monetization | Helsinki | Business & Operations | remote | full-time, permanent

- Compensation and Benefits Manager | Helsinki | People | onsite | full-time, permanent

- Senior Copywriter | Los Angeles | Entertainment & Partnerships | hybrid | full-time, permanent

- Marketing Data Analyst, Performance Marketing | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Marketing Studio Lead | Helsinki | Marketing | onsite | full-time, permanent

- Senior Game Designer, New Project | Shanghai | Game Development | onsite | full-time, permanent

- Senior Game Programmer, Brawl Stars | Helsinki | Game Development | onsite | full-time, permanent

- Licensing Manager | Los Angeles | Entertainment & Partnerships | hybrid | full-time, permanent

- Senior VFX Artist, Squad Busters | Helsinki | Game Development | onsite | full-time, permanent

- VFX Artist, Clash of Clans | Helsinki | Game Development | onsite | full-time, permanent

- Marketing Lead, Supercell X | Helsinki | Marketing | onsite | full-time, permanent

- Performance Marketing Manager, Supercell X | Helsinki | Marketing | onsite | full-time, permanent

- Senior Application Security Engineer | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Senior Data Scientist, Clash of Clans | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Financial Analyst | Helsinki | Finance | onsite | full-time, permanent

- Senior Game Data Analyst, Clash Royale | Helsinki | Tech & Analytics | onsite | full-time, permanent

- Game Design Lead, Clash of Clans | Helsinki | Game Development | onsite | full-time, permanent

- Performance Marketing Manager | Helsinki | Marketing | onsite | full-time, permanent

- Lead 3D Environment Artist, mo.co | Helsinki | Game Development | onsite | full-time, permanent

- Senior Release Engineer, Clash of Clans | Helsinki | Game Development | onsite | full-time, permanent

- Marketing Producer, SC X | Helsinki | Marketing | onsite | full-time, permanent

- Combat Animator, New Project | Shanghai | Game Development | onsite | full-time, permanent

- VFX Artist, New Project | Shanghai | Game Development | onsite | full-time, permanent

- Live Operations & Monetization Lead, Brawl Stars | Helsinki | Business & Operations | onsite | full-time, permanent

- Monetization Manager, Live Games and New Games | Helsinki | Business & Operations | onsite | full-time, permanent

- Senior Community Manager - Clash of Clans, Clash Royale or New Game | Helsinki | Community | onsite | full-time, permanent

- Live Ops Manager, Live and New Games | Helsinki | Business & Operations | onsite | full-time, permanent